NAACL 2019 Summary

Proofpoint sent me to NAACL 2019, which was my first time attending an NLP conference. I have a few main takeaways that I wanted to share!

Transfer Learning Tutorial

Sebastian Ruder and his co-authors from AI2, CMU, and Huggingface marched through 220 slides with practical tips and tricks for applying this new class of Transformer-based language models, notably BERT, to particular target tasks. I give my brief summary of it below in case you don’t have 4 hours to re-watch the tutorial.

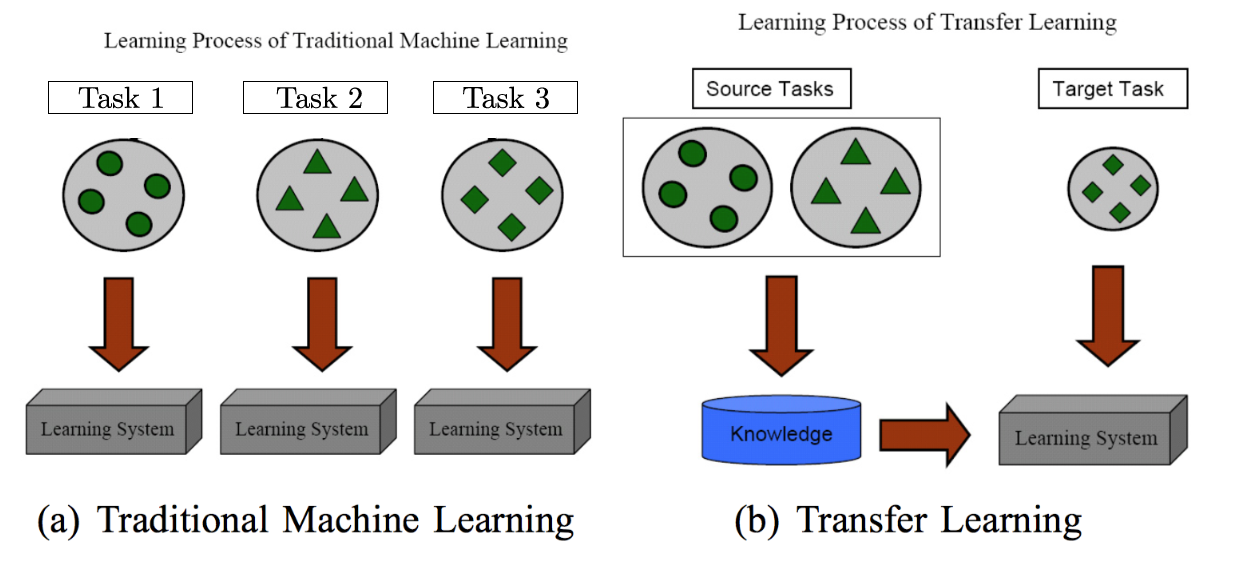

The goal of transfer learning is to improve the performance on a target task by applying knowledge gained through sequential or simultaneous training on a set of source tasks, as summarized by the diagram below from A Survey of Transfer Learning:

There are three general keys to successful transfer learning: finding a set of source tasks that produces generalizable knowledge, selecting a method of knowledge transfer, and combining the generalizable and task-specific knowledge. Learning higher-order concepts that generalize is crucial to the transfer. In image processing, those concepts are lines, shapes, and patterns. In natural language processing, they are syntax, semantics, morphology, and subject-verb agreement.

Finding the right set of source tasks is important! Language modeling has been the task of choice for a while now. The transfer medium has matured over the years: word2vec and Skip-Thoughts stored knowledge in a produced vector, but now the language models themselves are the generalized knowledge. Quite the paradigm shift! Contextual neural models pretrained on language modeling tasks then need target-specific language introduced slowly.

Finally, how do you optimize these models? A variety of techniques were proposed:

- Freezing all but the top layer: Long et al., Learning Transferable Features with Deep Adaptation Networks

- Chain-thaw, training one layer at a time: Felbo et al., Using millions of emoji occurrences to learn any-domain representations for detecting sentiment, emotion and sarcasm

- Gradually unfreezing: Howard and Ruder, Universal Language Model Fine-tuning for Text Classification

- Sequential unfreezing with hyperparameter tuning: Chronopoulou et al., https://arxiv.org/pdf/1902.10547.pdf
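
To make the difference between these schedules concrete, here is a minimal sketch of my own (not code from the tutorial). It models a network as a stack of `num_layers` layers and returns a mask saying which layers are trainable at a given point in training. The function names are hypothetical, and the one-layer-at-a-time schedule is a simplification: it only shows the idea behind chain-thaw, not the exact ordering used in the paper.

```python
from typing import List


def freeze_all_but_top(num_layers: int) -> List[bool]:
    """Only the top (last) layer is trainable; everything below stays frozen."""
    return [i == num_layers - 1 for i in range(num_layers)]


def one_layer_at_a_time(num_layers: int, step: int) -> List[bool]:
    """Exactly one layer is trainable per step.

    Assumption: layers are visited bottom-up and wrap around. Chain-thaw as
    published has its own ordering, which this sketch does not reproduce.
    """
    active = step % num_layers
    return [i == active for i in range(num_layers)]


def gradual_unfreezing(num_layers: int, epoch: int) -> List[bool]:
    """Unfreeze one additional layer per epoch, starting from the top."""
    num_unfrozen = min(num_layers, epoch + 1)
    return [i >= num_layers - num_unfrozen for i in range(num_layers)]


if __name__ == "__main__":
    # Show how the trainable set grows from the top layer downward.
    for epoch in range(4):
        print(epoch, gradual_unfreezing(4, epoch))
```

In a PyTorch model, a mask like this would typically be applied by setting `requires_grad` on each layer's parameters before every epoch. How layers are grouped depends on the model, so treat the flat list here as an illustration only.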

Probing Language Models

Researchers are only beginning to develop the tooling necessary to understand these large models. Here are some papers that highlight this research effort:

- Visualizing and Measuring the Geometry of BERT (blog post)

- Understanding Learning Dynamics Of Language Models with SVCCA

- A Structural Probe for Finding Syntax in Word Representations

- Attention is not Explanation

- The emergence of number and syntax units in LSTM language models

- Neural Language Models as Psycholinguistic Subjects: Representations of Syntactic State

BERT

BERT won best paper, which was no surprise. Because preprints circulate so quickly, ELMo felt like old news by the time the conference actually arrived. The result was a dissonance between what many of the papers were adapting (ELMo) and what was state of the art at that moment (BERT).